Your CPU and modern games

Today I'm going to explain the CPU requirements and multi-threading demands of today's games to clear up some confusion. I keep hearing people come here and say they were told they need a quad core to play Skyrim and Battlefield 3, which is totally preposterous. This may change with other games, but these two are the ones that keep popping up. Keep in mind that some games can use 4 cores, but not at 100%, and no game can use more than 4 cores. To clarify, just because you see blips of activity on all of your cores doesn't mean the game is using them. That is just Windows balancing the load as best it can.
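One standard way to see why piling on cores stops helping is Amdahl's law (a general formula, not something measured in the benchmarks below): if only a fraction p of the CPU work can be split across threads, the best-case speedup on n cores is 1 / ((1 - p) + p / n). A minimal Python sketch, where p = 0.5 is a hypothetical value picked for illustration, not a measured figure for Skyrim or Battlefield 3:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` cores when only `parallel_fraction` of the work runs in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # this part never gets faster with more cores
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    p = 0.5  # hypothetical: half of the CPU work can be spread across threads
    for n in (1, 2, 4, 6, 8):
        print(f"{n} cores: {amdahl_speedup(p, n):.2f}x")
```

With p = 0.5, going from 4 to 8 cores only moves the ideal speedup from 1.6x to about 1.78x, because the serial half of the work runs at the same speed no matter how many cores you add.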

To get a bit technical, people confuse core count and GHz (speed) with how fast a CPU really is. The CPU architecture plays the biggest role in its processing power; more cores and more GHz aren't everything. For those of you who remember circa 2002, the Pentium 4 was hitting the 2.5-3GHz range while the Athlon XP chips ran below 2GHz at stock. Yet the Athlon chips often outperformed these Pentiums, simply because they got more work done per clock.

That is called IPC, or instructions per clock: how many instructions a core can complete in each clock cycle. Multiply IPC by the clock speed (GHz) and you get a rough idea of how many instructions each core processes per second, so at the same IPC a higher clock wins, but a chip with a lower clock and higher IPC can still come out ahead. Please do not confuse this with "how many things can be done per core," because it isn't that.
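The IPC point is just multiplication: per-core throughput is roughly IPC times clock speed. The sketch below uses made-up IPC values (not measurements of a Pentium 4, an Athlon XP, or any real chip) to show how a lower-clocked core can still come out ahead:

```python
def per_core_throughput(ipc: float, ghz: float) -> float:
    """Billions of instructions per second for one core: IPC x clock speed in GHz."""
    return ipc * ghz


# Made-up values for illustration only; real IPC also varies by workload.
high_clock_chip = per_core_throughput(ipc=1.0, ghz=3.0)
low_clock_chip = per_core_throughput(ipc=1.6, ghz=2.0)

print(f"3.0 GHz, IPC 1.0: {high_clock_chip:.1f} billion instructions/s")
print(f"2.0 GHz, IPC 1.6: {low_clock_chip:.1f} billion instructions/s")
```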

Let us continue. Today's top dogs are the AMD FX 8170, the Intel Core i7 3770k, and the Intel Core i7 3960x. The 3770k belongs to the LGA 1155 (mainstream) platform and is based on the 3rd-generation Ivy Bridge core. The Core i7 3960x belongs to the LGA 2011 (enthusiast) platform and is based on the 2nd-generation Sandy Bridge-E core. The AMD FX uses the AM3+ socket and is based on the Family 15h (Bulldozer) microarchitecture.

Most people want to believe that the 8-core 3.9GHz AMD monster will make the 3.5GHz 4-core 3770k succumb to its will. That is utterly incorrect. Currently, the Bulldozer architecture performs comparably to the first-gen Core i CPUs (the i7 920 era) because of its low IPC, slower integrated memory controller (IMC), weaker single-threaded performance, and a module design that isn't up to par. Because of all this, gaming performance is actually better on the Deneb/Thuban lineup (Phenom 2 965/1100T). AMD ramps up the clocks to try to make up for the lost performance, but the FX processors still fall short in single-threaded work, which is what your games mostly are. The Sandy Bridge architecture is roughly 12-15% faster clock for clock than Nehalem, and Ivy Bridge is roughly 10-12% faster than Sandy Bridge. Where does this put Bulldozer (FX)? Two generations behind in performance, or roughly 25% slower in games than current Intel CPUs.
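A quick way to check the "two generations behind" figure is to compound the per-generation clock-for-clock gains quoted above. Treating FX as Nehalem-class per clock (the assumption from the text), Ivy Bridge comes out roughly 23-29% faster, which means FX is roughly 19-22% slower per clock. That is in the same ballpark as the 25% figure, though real game results also depend on clock speeds and how many threads a game uses. A minimal Python sketch of the arithmetic:

```python
def compound(*gains: float) -> float:
    """Chain per-generation clock-for-clock gains (0.12 means +12%) into one multiplier."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total


# Gains quoted in the text: Nehalem -> Sandy Bridge, then Sandy Bridge -> Ivy Bridge.
low = compound(0.12, 0.10)   # low end of both ranges
high = compound(0.15, 0.12)  # high end of both ranges

# Assumption from the text: FX is roughly Nehalem-class per clock,
# so FX delivers 1/multiplier of Ivy Bridge's per-clock performance.
print(f"Ivy Bridge vs Nehalem-class, per clock: {low:.2f}x to {high:.2f}x")
print(f"FX shortfall per clock: {1 - 1 / low:.0%} to {1 - 1 / high:.0%}")
```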

So you're probably wondering why I haven't mentioned SB-E yet. For starters, the only affordable CPU in that lineup is the 3820, which is bested in games by the SB 2600k. As I mentioned before, more cores doesn't mean more performance, so there is literally no point in getting a 3930k or 3960x for gaming, period. Don't let terms like "quad-channel RAM" or "Extreme" fool you; they don't make a difference in games. Don't believe me? Look at my sig; I speak from experience. So for now, we can forget this lineup, since this thread is about gaming.

Moving along, the point of this thread is to show you, with graphs and non-fancy words, why you don't need more cores for current games. It is also to give those of you wanting to upgrade a feel for where you stand with your current CPU, so you can see whether you need a new CPU or simply more GPU horsepower. As I mentioned before, my main focus will be Battlefield 3 and Skyrim, so if you're here looking for benchmarks of other games, I hate to disappoint. Besides, these two are among the most demanding and most in-demand games. If you have another game in question, you can most likely judge its performance from these graphs.

The CPUs I will be testing are:

Core i7 3960x (2 and 4 core)

Core i5 2500k (2 and 4 core)

Core i5 750 (2 and 4 core)

Core 2 Quad Q8200

AMD AthlonX2 6000+

Unfortunately I don't have an i3 of any generation on hand, but you can guesstimate that a first-gen i3 will be comparable to the 2-core i5 750 benches. I also wish I had a Phenom 2 quad to throw in there, but the one I "have" is currently sitting in the machine of somebody who isn't talking to me.

Each CPU will be tested at stock because I feel that most people who need this thread probably won't overclock. I feel that reviewers who overclock their rigs to show game performance in tests like these need to re-evaluate what exactly they are trying to show, and who their target audience is. This isn't like benchmarking to find the maximum performance of a new product. Each game will be set to Ultra settings (as always requested) at 1920x1200 resolution. Please understand that by Ultra I mean every in-game setting set as high as it can go, but I will NOT be using any extra eye candy such as anti-aliasing. The GPU for all tests is an AMD Radeon HD 5850. So let's get started.

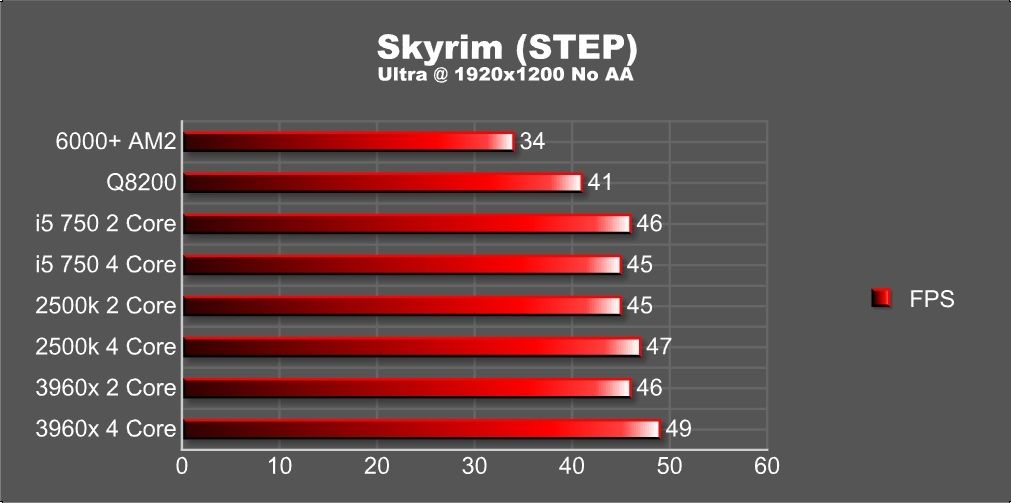

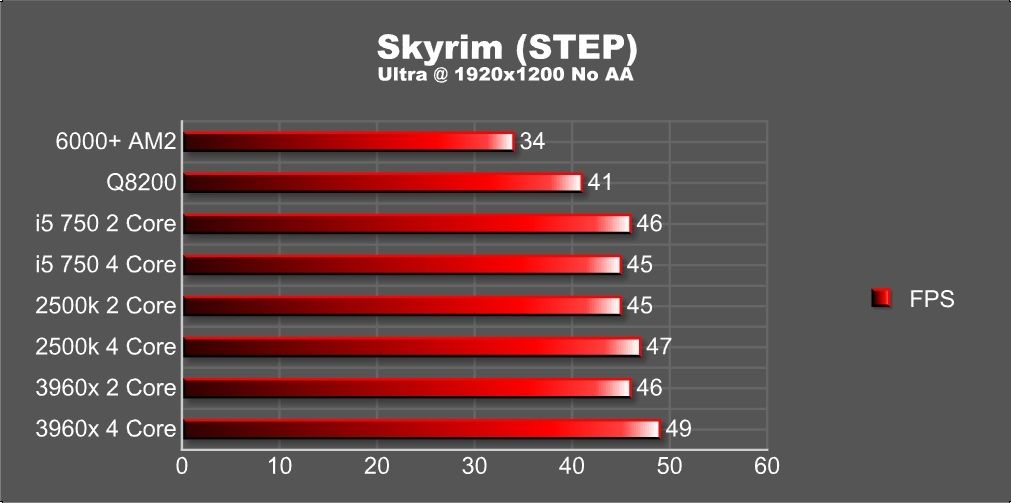

In Skyrim I will be recording from the very beginning of the game, since that is the point where everything is most likely to be exactly the same between runs. Take note that in Skyrim the most demanding areas are heavily wooded ones and areas with a lot of action going on, for example a dragon fight with many NPCs attacking it with magic. I will also take this time to mention that my copy of Skyrim is heavily modded. The only mods I use are HD texture mods, with no story or gameplay modifiers. To be specific, I use all the extreme options from STEP, which can be found over at the Nexus. My copy is about 14GB in size, whereas a vanilla copy is between 6 and 7GB, just to give you an idea of how large these mods are. With that being said, my copy will tax these CPUs (and, more importantly, my GPU) more than yours. I estimate a vanilla copy will run about 15% faster or more than mine, depending on the CPU in question.

As we can tell from the benchmarks here my copy of Skyrim is really taxing my GPU. The thing to mention here though, is that between 2 generations of CPUs there isn't much

of a difference between them, and 2 or 4 cores. Even the Core 2 Quad is hanging in there with admirable performance granted its age. I think if I didn't have so many high

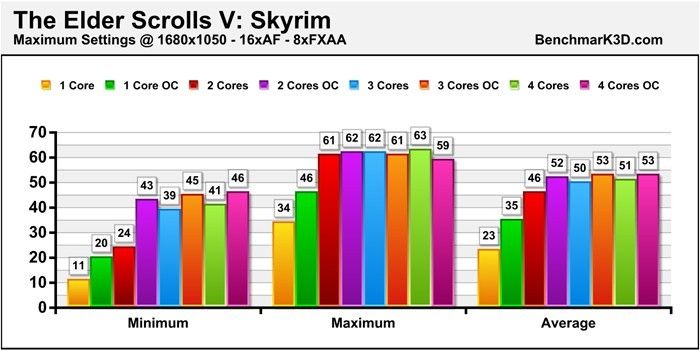

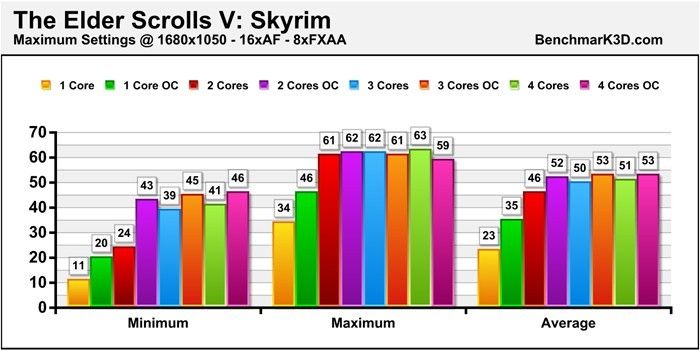

res texture mods I think the 6000+ would even be quite playable despite its age as well. Next up I borrowed a few pics from another site that shows the same thing. Only

difference here, is they are using a lower resolution which actually adds more stress to the CPU. I'll come to that topic later though.

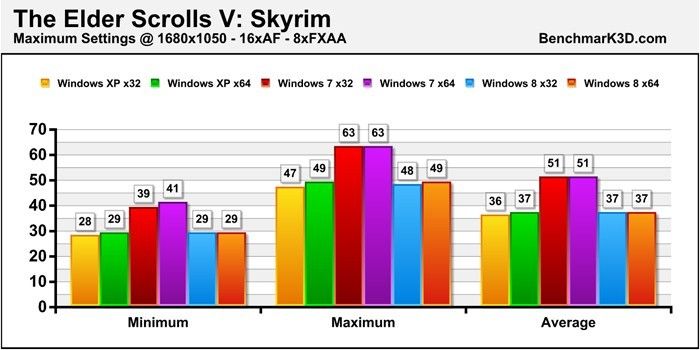

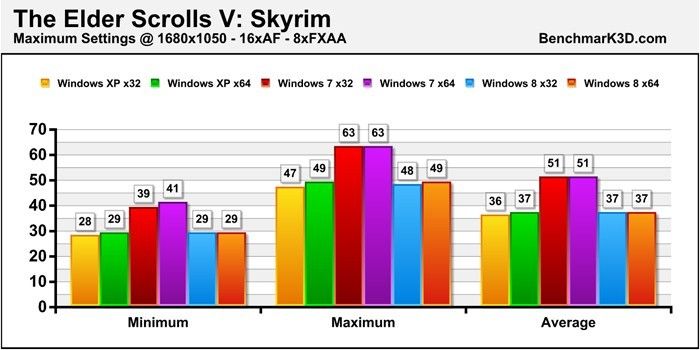

Their results are similar to mine despite the different circumstances and settings. This next picture is for all of you XP users: it is pointless to hang on to the aging OS, and this proves it.

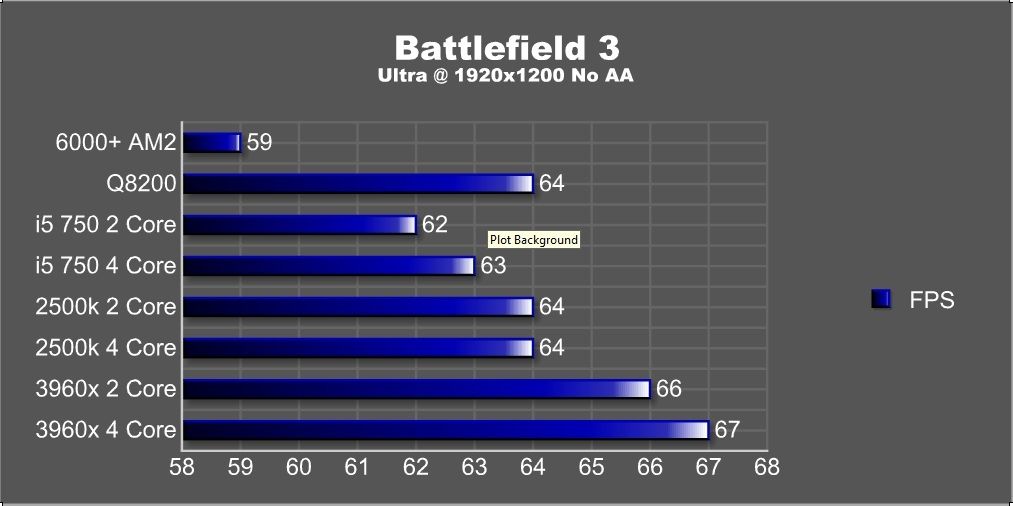

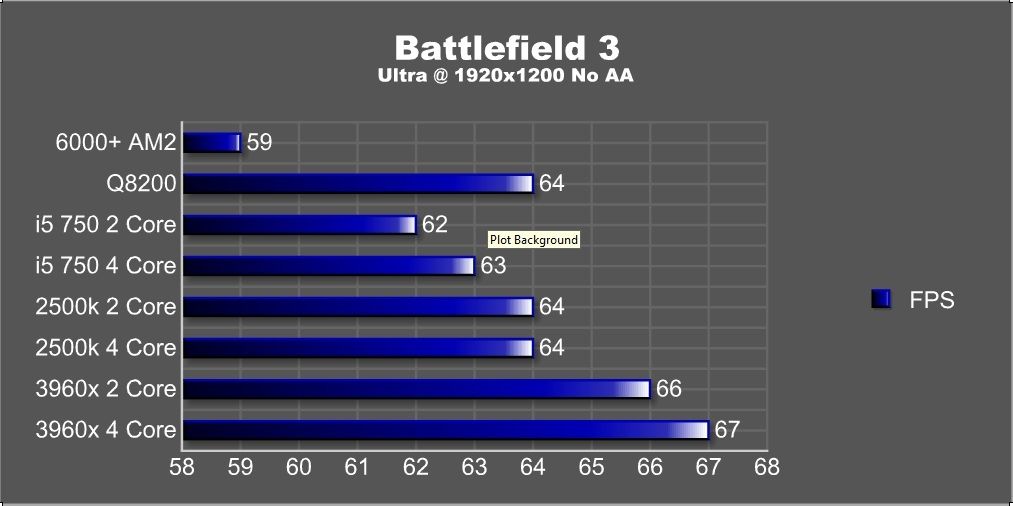

In Battlefield 3 I will be recording data from the beginning of the single-player campaign. I will start when he lands on the train and finish with the gun pointed at me. It isn't the most demanding section at all, but in my opinion it is the fairest and most repeatable way I can do this, since there is no formal benchmark. Again, I will have the settings at Ultra but not Max, because anti-aliasing depends on how powerful your GPU is rather than your CPU.
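Since there is no built-in benchmark, a run like this is usually summarized from a frame-time log. A minimal sketch, assuming you already have each frame's render time in milliseconds as a plain list (parsing any particular logging tool's file format is left out). Average FPS is total frames divided by total time, which is not the same as averaging per-frame FPS values; "minimum FPS" here means the single slowest frame, whereas some tools report the minimum over one-second windows instead.

```python
def summarize_run(frame_times_ms: list[float]) -> dict[str, float]:
    """Average and minimum FPS from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    if any(t <= 0 for t in frame_times_ms):
        raise ValueError("frame times must be positive")
    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        # frames rendered divided by the wall-clock length of the run
        "avg_fps": len(frame_times_ms) / total_seconds,
        # instantaneous FPS of the slowest single frame
        "min_fps": 1000.0 / max(frame_times_ms),
    }


if __name__ == "__main__":
    # Hypothetical log: mostly ~60 fps frames with one 40 ms hitch.
    log = [16.7] * 59 + [40.0]
    stats = summarize_run(log)
    print(f"avg {stats['avg_fps']:.1f} fps, min {stats['min_fps']:.1f} fps")
```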

For as many times as I've heard "Battlefield 3 takes advantage of all cores," this really proves otherwise. Even at Ultra settings, the very old 6000+ dual core runs at an almost perfect 60FPS. I think the minimal (1 to 2%) increase in performance isn't worth the extra cash it takes to buy a quad. So if you are on a budget, it is pretty pointless to go AMD FX (especially the 6- or 8-core), because an Ivy Bridge i3 would probably perform the same as or better than my 2500k pictured here. These 2 benches also prove that there is absolutely no reason to go SB-E. It isn't worth spending 800 dollars more for 2FPS.

Here is where I talk about lower resolutions. As we know, the higher the resolution and the more eye candy, the more GPU power we need. Something to think about here is that a lower-resolution monitor requires more CPU power. The reason is that the GPU has less work to do per frame at a lower resolution, so it finishes frames faster and asks for new ones sooner, which gives the CPU more of a workout setting up frames. That is as simple as I can make it. At higher resolutions the GPU takes longer to process each frame, so the CPU has to supply frames at a slower rate. The same logic applies if you want SLI or CrossFire: you need a fast, ideally overclocked, CPU, because it now has to prepare 25-50% more frames than usual, and then the drivers split the workload between your GPUs. The rest of that can get pretty technical, so we will leave it at that.
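The resolution argument can be sketched with a toy model. Assume (a simplification, not how any specific engine or driver works) that CPU and GPU work overlap, so whichever side is slower sets the frame rate, and that GPU time per frame scales linearly with pixel count. All of the millisecond figures below are hypothetical:

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Toy model: CPU and GPU work overlap, so the slower of the two sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def gpu_ms_at(width: int, height: int, base_ms: float,
              base_width: int = 1920, base_height: int = 1200) -> float:
    """Assumption: GPU time per frame scales linearly with the number of pixels."""
    return base_ms * (width * height) / (base_width * base_height)


def sli_gpu_ms(single_gpu_ms: float, scaling: float) -> float:
    """Assumption: a second GPU delivers `scaling` more frames (0.25 means +25%)."""
    return single_gpu_ms / (1.0 + scaling)


CPU_MS = 12.0        # hypothetical CPU time to set up one frame
GPU_MS_1200P = 20.0  # hypothetical GPU time per frame at 1920x1200

if __name__ == "__main__":
    for width, height in [(1920, 1200), (1280, 720)]:
        gpu = gpu_ms_at(width, height, GPU_MS_1200P)
        limiter = "GPU" if gpu > CPU_MS else "CPU"
        print(f"{width}x{height}: {fps(CPU_MS, gpu):.0f} fps ({limiter}-bound)")

    for scaling in (0.25, 0.50):
        budget = sli_gpu_ms(GPU_MS_1200P, scaling)
        print(f"+{scaling:.0%} from a 2nd GPU: CPU must set up a frame every {budget:.1f} ms")
```

With these made-up numbers, 1920x1200 is GPU-bound at 50 fps, while dropping to 1280x720 cuts GPU time to 8 ms and the 12 ms CPU becomes the limit at about 83 fps. The second loop covers the SLI/CrossFire case: if a second GPU adds 25-50% more frames, the CPU has to finish each frame in 16.0 ms or 13.3 ms instead of 20 ms to keep both cards fed.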

With all that being said, if you already have something like a C2D E8400 or better, or something in the Phenom 2 range, then most likely the CPU isn't holding you back unless you have a dual-GPU setup or you're trying to run tons of extra eye candy. If your budget doesn't stretch to an Intel quad, think about grabbing an Ivy Bridge dual core. Or, if you really can't afford that, try finding a used Phenom 2 quad or dual core. Clock for clock, Phenom 2 is faster at games than the FX series, and you can find them pretty cheap now. As the results show, you don't need a powerhouse quad to run Battlefield 3 or Skyrim.

Another thing I should touch on briefly is Haswell, i7s, and HT (Hyper-Threading). No, HT doesn't help gaming; if anything, it can sometimes hamper gaming performance. Since an i7 is identical to an i5 apart from HT (and 1 or 2MB of extra cache), paying the extra cash is pointless.

Another thing I ran into recently is overclocking. Every chip bins differently, and every setup is different. There is no guarantee that an i7 will overclock higher than an i5. Most of the time, the extra cache and HT make the i7 run hotter, resulting in lower overclocks. It depends entirely on luck and your ability to OC.

Finally, Haswell isn't supposed to drop until June next year or later. If you already have an SB or IB chip, there is no point in upgrading. Also, Haswell will use a different socket and platform, so there will be no upgrading to Haswell on your current Z77 board.

To finish this topic up, I am going to touch briefly on RAM. For those of you who think you need 16GB of RAM or more in a gaming rig, you are highly mistaken. Before I go into detail, you must understand that 90% of the games developers are spitting out today are straight console ports. With that being said, we all know that consoles have very few real resources, and they came out around 2005, when PC gaming was finally getting out of its hole. Now on to the beef: no current game will take more than 3GB of RAM, and not even my highly modded Skyrim takes more than 2.7GB at any given time. The reason is that games are stuck being developed as 32-bit programs, then ported over to PC and played on rigs that are typically 64-bit. So if you stick 16GB of RAM in your machine, at most you will use "maybe" 8GB of it, and you have to be running a ton of stuff in the background to do even that.

OK, so future-proofing sounds like a plan... but not really. The new consoles are due for release in 2013/2014, and that is when we will finally see real advances in PC gaming, because developers can get out of their cash-cow shells. It will still take a while for games to be 64-bit native and able to use more than 3GB of RAM at any given time. I have a feeling most games will look similar to what we have today, apart from the exclusive AAA titles. Another thing to consider is that DDR4 is supposed to go mainstream sometime in 2014 to 2015, meaning that if you plan on keeping your rig for about 3-5 years, your next setup will probably be DDR4. Because of this, you are pretty much wasting extra cash that could go into an SSD or extra GPU power, which will benefit you now rather than later.
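The 3GB ceiling lines up with how much memory a 32-bit program can address. A quick Python check of the arithmetic: a 32-bit pointer covers 2^32 bytes (4 GiB); on Windows, a 32-bit process gets 2 GiB of user address space by default, or the full 4 GiB on 64-bit Windows if the executable is flagged large-address-aware. Code, DLLs, and other mappings share that space too, so the memory actually usable for game data is lower still.

```python
GIB = 1024 ** 3  # bytes in one gibibyte

address_space = 2 ** 32                  # everything a 32-bit pointer can reach
default_user_space = address_space // 2  # 32-bit process on Windows, default setting
laa_user_space = address_space           # large-address-aware 32-bit process on 64-bit Windows

for label, size in [
    ("32-bit address space", address_space),
    ("Default user space, 32-bit Windows process", default_user_space),
    ("Large-address-aware on 64-bit Windows", laa_user_space),
]:
    print(f"{label}: {size // GIB} GiB")
```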

I hope this little guide/review/whatever you want to call it has helped you make a more informed decision about your gaming rig's guts. Just take note: everything I said here is strictly for gaming rigs. It is also meant to educate those who want to give advice to newcomers seeking build help but don't quite grasp what is needed.
